What are Big Data, Hadoop & Spark? What is the relationship among them?

Awantik Das

Big Data - Big Data is a problem statement: the size of the data to be processed has grown to hundreds of petabytes (1 PB = 1000 TB). Yahoo Mail generates some 40-50 PB of data every day, and Yahoo has to read all of it and filter out spam. E-commerce websites generate logs for every purchase and every window-shopping session. Trust me, window-shopping data is even more important for the business. Retail stores face the same problem: deciding which products to place next to each other means processing the bills of all buyers and finding relations between products. The classic beer-and-diapers case falls under this category. Machine learning can produce fantastic results if a large amount of data can be processed.
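To make the "relations between products" idea concrete, here is a minimal sketch that counts how often two products appear on the same bill. The bills and product names are made up for illustration, and the function name `count_pairs` is hypothetical. A real retailer would run this kind of counting over millions of bills, which is exactly where the data size becomes a problem.

```python
from collections import Counter
from itertools import combinations

# Hypothetical bills: each set holds the products on one receipt.
bills = [
    {"beer", "diapers", "chips"},
    {"beer", "diapers"},
    {"milk", "bread"},
    {"beer", "diapers", "milk"},
    {"bread", "chips"},
]

def count_pairs(bills):
    """Count how many bills contain each pair of products."""
    pair_counts = Counter()
    for bill in bills:
        # Sort so that ("beer", "diapers") and ("diapers", "beer") are one key.
        for pair in combinations(sorted(bill), 2):
            pair_counts[pair] += 1
    return pair_counts

pair_counts = count_pairs(bills)
top_pair, top_count = pair_counts.most_common(1)[0]
print(top_pair, top_count)
```

With this toy data, the pair bought together most often is beer and diapers, which is the kind of relation a store could use when arranging shelves.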

Hadoop - This is a solution to big data problems. It provides a distributed storage and distributed computing framework. Distributed Storage - As of 2015, a single system could store up to about 16 TB, whereas the data in question is usually on the order of petabytes. The only way to store such a huge amount of data is to break it into chunks and store them across distributed systems. Distributed Processing - Since the data is stored on physically different systems, we can process it in parallel on each of the nodes (systems) and thus leverage the computation power of every node. Hadoop consists of 3 major things - HDFS, YARN & MapReduce. HDFS is the distributed file system: it breaks the data into blocks and keeps track of which nodes hold each block, so that every data request can be routed to the right nodes. MapReduce - This defines two functions, the mapper and the reducer. Mapper - The function that executes on each node against its local chunk of data. It provides parallelism to the application. Reducer - The part of the work that cannot be done chunk by chunk, such as combining the mappers' outputs, is done by the reducer, which typically runs on one or a few nodes. YARN manages the cluster's resources and schedules these functions on the nodes.
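The mapper and reducer roles can be sketched in plain Python with a word count. This is only a single-machine illustration of the idea, not Hadoop's API: the function names (`split_into_chunks`, `mapper`, `shuffle`, `reducer`) and the sample lines are assumptions made for this example, and each "node" is just a list in memory.

```python
from collections import defaultdict

def split_into_chunks(lines, num_nodes):
    """Spread lines round-robin across hypothetical nodes (distributed storage)."""
    chunks = [[] for _ in range(num_nodes)]
    for i, line in enumerate(lines):
        chunks[i % num_nodes].append(line)
    return chunks

def mapper(chunk):
    """Runs independently on one chunk and emits (word, 1) pairs."""
    return [(word.lower(), 1) for line in chunk for word in line.split()]

def shuffle(mapped_outputs):
    """Group the values emitted by all mappers by key."""
    grouped = defaultdict(list)
    for output in mapped_outputs:
        for key, value in output:
            grouped[key].append(value)
    return grouped

def reducer(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)

lines = [
    "big data needs hadoop",
    "spark reads data from hadoop",
    "big data big results",
]

chunks = split_into_chunks(lines, num_nodes=2)
# On a real cluster each mapper call would run on a different node in parallel.
mapped = [mapper(chunk) for chunk in chunks]
grouped = shuffle(mapped)
word_counts = dict(reducer(key, values) for key, values in grouped.items())
print(word_counts)
```

The mapper never needs to see another node's chunk, which is why it parallelizes so well. Only the grouping and summing step needs data from all chunks.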

Spark - In the current scenario, Hadoop is mostly used for storing large-scale data, and we generally see it as the underlying storage infrastructure. Spark can be seen as a superfast computation framework on top of that storage, usually 10x-50x faster than MapReduce. Apart from Hadoop, Spark can also use other technologies as its distributed storage layer.

Keywords : spark data-science hadoop

Recommended Reading

Practical use cases of AI in Business

Artificial Intelligence is one of the most fascinating and bears much of the future in it. In fact, AI is the future of technology and businesses. Artificial intelligence with allied technologies like machine learning makes it possible for machines to impro...

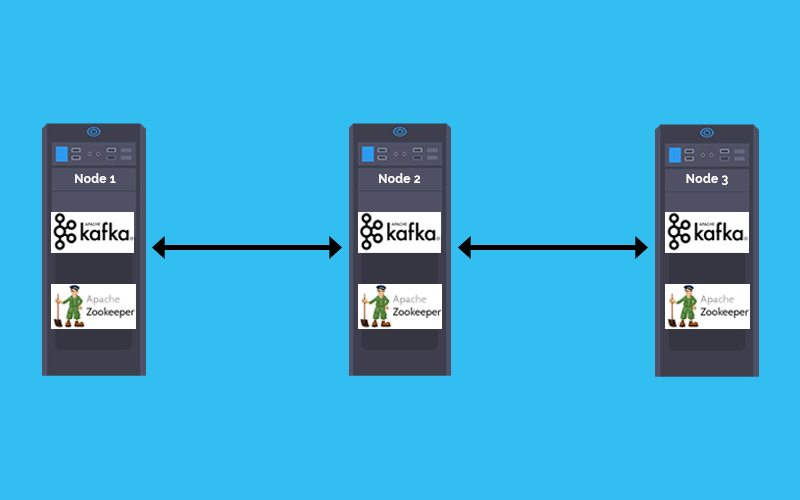

How to deploy Kafka and Zookeeper cluster on Kubernetes

In a Microservices based architecture message -broker plays a crucial role in inter-service communication. The combination of Kafka and zookeeper is one of the most popular message broker. This tutorial explains how to Deploy Kafka and zookeeper stateful se...

Big Data Processing with Deep Learning - Spark vs TensorFlow

More and more organizations are integrating big data pipeline with deep learning infrastructure. This is something, Spark & TensorFlow folks have in mind as well. Let’s have a quick glance about their journey so far.

Why Learning Docker Containers is so important in IT industry?

Containerization/Dockerization is a way of packaging your application and run it in an isolated manner so that it does not matter in which environment it is being deployed. In the DevOps ecosystem containers are being used at large scale. and this is just...

Mass layoffs in IT Majors – speculations - facts - and the future ahead !

If you are an IT professional in India this may scare you a bit. Hearsay is Indian IT services companies are on a firing spree. A significant number of layoffs have prominently been made public from companies that include big names like Cognizant, IBM, Info...

Top 3 Applications of Apache Spark

Distributed computation got with induction of Apache Spark in Big Data Space. Lightning performance, ease of integration, abstraction of inner complexity & programmable using Python, Scala, Java & R makes it one of the . Here we are discussing most widely ...

How can I become a data scientist from an absolute beginner level to an advanced level?

This is the question that every budding Data Scientist asks himself while starting on the tedious yet adventurous journey to the world of Data Science. Other than struggling with the factors like self-doubt the beginners look for the Effective Learning Path...

How can one explain the concept of Apache Spark in layman's terms?

Data needs computation to get some information out. Size of data can be really huge. Huge data is broken down into chunks & stored across different systems.