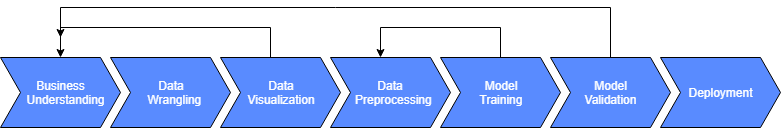

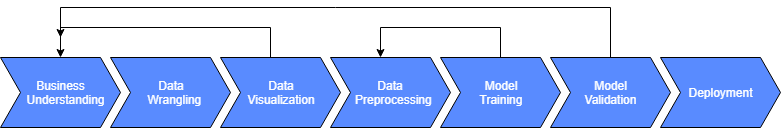

From development to deployment, the journey of a machine learning product can be broken down into 7 important stages:

1. Identify Business Problem: Every successful product in the marketplace exists to solve some problem; there is no product without a purpose. Identify the problem you or your team plan to solve. Then ask yourself: Do you need machine learning for it? If yes, do you have data for machine learning? Without data, machine learning is not possible. If data can be generated, you might have to focus on data gathering first: identify what information you plan to gather & set up the right infrastructure to collect it.

2. Data Wrangling: If you have reached this stage, in all likelihood you have identified the problem statement you are trying to solve & have a data-gathering system in place. Let's accept the reality that raw data is rarely usable without processing. The activities involved now are cleaning, handling missing values, restructuring & reshaping, collectively known as data wrangling. Depending on the scale of data, different tools & libraries are used. For small-scale data, pandas (a Python library) is enough; for large-scale data, we might have to use Spark (a distributed big-data processing framework). Typically, 60%-70% of the time in the entire machine learning product development life-cycle is spent on data wrangling.

3. Visualization: Once you have consolidated data, building graphical visualizations can unearth interesting insights. These insights provide a good description of the data & can also help identify the root cause of some events, for example, that sales dipped because of the season. Free, open-source libraries such as seaborn & matplotlib make visualization easy, & there are also enterprise-level paid products like Tableau. For live data, there are dashboards. Presenting complex information in a comprehensible way is an art.

4. Data Preprocessing: So far you have extracted knowledge out of data. Rather than limiting yourself to descriptive analysis, you now want to make predictions using machine learning (although machine learning is not limited to prediction). Machine learning algorithms have certain expectations of the data, & data preprocessing transforms data into the formats those algorithms expect. For example, machine learning algorithms do not understand text, so it needs to be transformed into numbers; the same applies to images & videos. Libraries like scikit-learn & pandas can do this at small scale; again, Spark can help with large-scale data preprocessing.

5. Model Training: Once data is preprocessed, it is ready to be fed to machine learning algorithms to create machine learning models capable of making predictions. The challenge here is to identify the right algorithm among a plethora of possibilities: linear models, tree-based models, neural networks etc. There are advanced learning algorithms suited to unstructured data like text & images, called deep learning algorithms. scikit-learn is a machine learning library suited to small-scale data, TensorFlow is a very popular deep learning library, & Spark (through its MLlib library) supports machine learning on large volumes of data.

6. Model Validation: Identifying the best of the trained models & the right configuration parameters (hyperparameters) for it can take a lot of computation. The model should also be validated against unseen data to estimate how well it generalizes. Good infrastructure can save data scientists experimentation time, & a skilled data scientist will run experiments efficiently & get results faster.

7. Deployment: The selected model has to be deployed for use in production. Models are usually deployed behind a REST interface. The deployment also needs to be elastic (able to scale infrastructure up & down) to handle fluctuating volumes of REST API calls. DevOps practices streamline both development & deployment.
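For stage 2 (data wrangling), here is a minimal sketch of the cleaning, missing-value handling & reshaping steps, written with the Python standard library only so the mechanics are visible. The records & field names (`store`, `month`, `sales`) are hypothetical, & mean imputation is just one simple choice among many. With pandas the same steps map roughly onto `dropna`, `fillna` & `pivot`, & Spark DataFrames offer similar operations at scale.

```python
from statistics import mean

# Hypothetical raw records with inconsistent text and missing values.
RAW = [
    {"store": " North ", "month": "Jan", "sales": "120"},
    {"store": "north", "month": "Feb", "sales": ""},    # missing sales -> imputed
    {"store": "South", "month": "Jan", "sales": "80"},
    {"store": None, "month": "Feb", "sales": "95"},     # missing store -> dropped
    {"store": "south", "month": "Feb", "sales": "100"},
]


def clean(records):
    """Normalise store names, drop rows without a store, parse sales as float or None."""
    rows = []
    for r in records:
        store = (r.get("store") or "").strip().title()
        if not store:
            continue  # a row missing its key field cannot be placed anywhere
        raw_sales = r.get("sales")
        rows.append({
            "store": store,
            "month": r["month"].strip(),
            "sales": float(raw_sales) if raw_sales not in (None, "") else None,
        })
    return rows


def impute_mean(rows, field):
    """Fill missing values of `field` with the mean of the observed values."""
    fill = mean(r[field] for r in rows if r[field] is not None)
    return [{**r, field: fill if r[field] is None else r[field]} for r in rows]


def pivot(rows, index, columns, values):
    """Reshape long rows into a wide {index: {column: value}} table."""
    table = {}
    for r in rows:
        table.setdefault(r[index], {})[r[columns]] = r[values]
    return table


tidy = impute_mean(clean(RAW), "sales")
wide = pivot(tidy, index="store", columns="month", values="sales")
print(wide)
# {'North': {'Jan': 120.0, 'Feb': 100.0}, 'South': {'Jan': 80.0, 'Feb': 100.0}}
```

The row with no store is dropped because it cannot be attributed to anything, while the missing sales value is filled in because the rest of its row is still useful.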
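For stage 3 (visualization), an insight like "sales dipped because of the season" usually starts as an aggregation that is then plotted. The sketch below assumes hypothetical monthly sales figures & a northern-hemisphere month-to-season mapping. It totals sales per season & renders a plain-text bar chart so it runs without dependencies; with matplotlib or seaborn, the same totals would be passed to a bar-plot call instead.

```python
# Hypothetical monthly sales figures (units sold).
MONTHLY_SALES = {
    "Jan": 40, "Feb": 35, "Mar": 60, "Apr": 70, "May": 75, "Jun": 90,
    "Jul": 95, "Aug": 85, "Sep": 65, "Oct": 55, "Nov": 45, "Dec": 50,
}

# Assumed month-to-season mapping (northern hemisphere).
SEASON_OF = {
    "Dec": "Winter", "Jan": "Winter", "Feb": "Winter",
    "Mar": "Spring", "Apr": "Spring", "May": "Spring",
    "Jun": "Summer", "Jul": "Summer", "Aug": "Summer",
    "Sep": "Autumn", "Oct": "Autumn", "Nov": "Autumn",
}


def totals_by_season(monthly):
    """Sum monthly values into seasonal totals, keeping first-seen season order."""
    totals = {}
    for month, value in monthly.items():
        season = SEASON_OF[month]
        totals[season] = totals.get(season, 0) + value
    return totals


def text_bar_chart(totals, width=40):
    """Render one bar per key, scaled so the largest value spans `width` characters."""
    peak = max(totals.values())
    label_width = max(len(k) for k in totals)
    lines = []
    for label, value in totals.items():
        bar = "#" * round(width * value / peak)
        lines.append(f"{label:<{label_width}} | {bar} {value}")
    return "\n".join(lines)


season_totals = totals_by_season(MONTHLY_SALES)
print(text_bar_chart(season_totals))
```

With these made-up numbers, the Winter bar is the shortest, which is the kind of visual cue that prompts a closer look at seasonal effects.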
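For stage 4 (data preprocessing), the sketch below shows two common transformations in plain Python: a bag-of-words encoding that turns text into token-count vectors, & min-max scaling of a numeric column into [0, 1]. The sample reviews & prices are made up. In scikit-learn, the closest equivalents are `CountVectorizer` (in `sklearn.feature_extraction.text`) & `MinMaxScaler` (in `sklearn.preprocessing`). Their details differ; for example, `CountVectorizer`'s default tokenizer ignores single-character tokens.

```python
import re


def tokenize(text):
    """Lower-case the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def fit_vocabulary(docs):
    """Assign each distinct token seen in `docs` a column index, in sorted order."""
    tokens = sorted({tok for doc in docs for tok in tokenize(doc)})
    return {tok: i for i, tok in enumerate(tokens)}


def bag_of_words(docs, vocab):
    """Turn each document into a vector of token counts over `vocab`."""
    vectors = []
    for doc in docs:
        vec = [0] * len(vocab)
        for tok in tokenize(doc):
            if tok in vocab:  # tokens not seen when fitting the vocabulary are ignored
                vec[vocab[tok]] += 1
        vectors.append(vec)
    return vectors


def min_max_scale(values):
    """Rescale numbers linearly into [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]


reviews = ["Great product, great price", "Poor quality", "Great quality"]
vocab = fit_vocabulary(reviews)
X_text = bag_of_words(reviews, vocab)
prices = min_max_scale([10.0, 25.0, 40.0])
print(vocab)   # {'great': 0, 'poor': 1, 'price': 2, 'product': 3, 'quality': 4}
print(X_text)  # [[2, 0, 1, 1, 0], [0, 1, 0, 0, 1], [1, 0, 0, 0, 1]]
print(prices)  # [0.0, 0.5, 1.0]
```

The vocabulary is fitted once & then reused, so new text at prediction time is mapped onto the same columns the model was trained on.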
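For stage 5 (model training), here is a sketch of what "training" means for the simplest of the linear models mentioned above: fitting a line y = w*x + b by batch gradient descent on the mean squared error. The toy data, learning rate & epoch count are assumptions chosen so that it converges. Libraries such as scikit-learn (`LinearRegression`) solve this problem directly & far more robustly.

```python
def train_linear_regression(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w*x + b by batch gradient descent on mean squared error."""
    n = len(xs)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error^2) with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(model, x):
    """Apply the learned line to a new input."""
    w, b = model
    return w * x + b


# Toy data generated from y = 2x + 1 (hypothetical, noise-free).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
model = train_linear_regression(xs, ys)
print(model, predict(model, 10.0))
```

The learned parameters end up very close to w = 2 & b = 1. Tree-based models & neural networks replace the straight line with a richer function, but the idea of adjusting parameters to reduce error on training data is the same.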
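For stage 6 (model validation), the sketch below uses k-fold cross-validation to pick a configuration. Each candidate (here a polynomial of degree 0 or 1, standing in for a hyperparameter) is trained on k-1 folds & scored on the held-out fold, so every score comes from data that model did not see. The data, fold count & random seed are illustrative assumptions. scikit-learn packages the same idea as `KFold`, `cross_val_score` & `GridSearchCV` in `sklearn.model_selection`.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Shuffle range(n) with a fixed seed and split it into k near-equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(idx[start:start + size])
        start += size
    return folds


def fit_polynomial(xs, ys, degree):
    """Least-squares fit of degree 0 (constant) or 1 (straight line); returns a predictor."""
    if degree not in (0, 1):
        raise ValueError("this sketch only supports degree 0 or 1")
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    if degree == 0:
        return lambda x: my
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return lambda x: my + slope * (x - mx)


def cross_val_mse(xs, ys, degree, k=5):
    """Average held-out MSE over k folds; each fold is scored by a model that never saw it."""
    fold_scores = []
    for fold in k_fold_indices(len(xs), k):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        model = fit_polynomial([xs[i] for i in train], [ys[i] for i in train], degree)
        errors = [(model(xs[i]) - ys[i]) ** 2 for i in fold]
        fold_scores.append(sum(errors) / len(errors))
    return sum(fold_scores) / len(fold_scores)


xs = [float(x) for x in range(20)]
ys = [2.0 * x + 1.0 for x in xs]  # hypothetical noise-free data
scores = {degree: cross_val_mse(xs, ys, degree) for degree in (0, 1)}
best_degree = min(scores, key=scores.get)
print(scores, best_degree)
```

Once a configuration has been selected this way, a final check on a separate test set that played no part in the selection gives a less optimistic estimate of how the model will perform on new data.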
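For stage 7 (deployment), here is a minimal sketch of serving a model behind a REST interface using only Python's built-in `http.server`. A POST to `/predict` with a JSON body such as `{"x": 3}` returns a JSON prediction. The endpoint path, payload shape & hard-coded parameters are hypothetical. `http.server` is meant for development, not production: real deployments typically use a production-grade web framework or model server, run several replicas behind a load balancer & let the platform add or remove replicas as demand changes.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical trained parameters; in practice they would be loaded from a saved model.
MODEL = {"w": 2.0, "b": 1.0}


def handle_predict(body):
    """Validate a JSON request body (bytes) and return (status_code, response_dict)."""
    try:
        payload = json.loads(body)
        x = float(payload["x"])
    except (ValueError, KeyError, TypeError):
        return 400, {"error": 'expected JSON like {"x": 3.0}'}
    return 200, {"prediction": MODEL["w"] * x + MODEL["b"]}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            status, result = 404, {"error": "not found"}
        else:
            length = int(self.headers.get("Content-Length", 0))
            status, result = handle_predict(self.rfile.read(length))
        data = json.dumps(result).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def make_server(host="127.0.0.1", port=8000):
    """Create (but do not start) a single-process server exposing POST /predict."""
    return HTTPServer((host, port), PredictHandler)
```

Calling `make_server().serve_forever()` starts the service on port 8000, after which `curl -X POST localhost:8000/predict -d '{"x": 3}'` should return `{"prediction": 7.0}`. Keeping the request validation in `handle_predict`, separate from the HTTP plumbing, makes the prediction logic easy to test & to move to a different web framework later.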

Most machine learning products in deployment can be mapped to this pipeline.

Awantik Das
