Big Data Processing with PySpark Training

Big Data Processing with PySpark Course:

PySpark is the Python API for Apache Spark: it lets you program Spark and write Spark applications in Python. PySpark allows data scientists to perform fast, distributed transformations on large datasets. Apache Spark is open source and uses in-memory computation. Compared with traditional MapReduce jobs, it can run tasks up to 100 times faster when it uses in-memory computation and up to 10 times faster when it uses disk.

Big Data Processing with PySpark Course Curriculum

What is Apache Spark?

Spark Jobs and APIs

Resilient Distributed Dataset

Datasets

Project Tungsten

Unifying Datasets and DataFrames

Tungsten phase 2

Continuous applications

Internal workings of an RDD

Schema

Lambda expressions

Transformations

Math/Statistical transformations

Data structure-based transformations

flatMap function

coalesce

Actions (reduce, count, collect) and caching

Loading data

wholeTextFiles

Saving RDD

Python to RDD communications

Speeding up PySpark with DataFrames

Generating our own JSON data

Creating a temporary table

DataFrame API query

Interoperating with RDDs

Programmatically specifying the schema

Number of rows

Querying with SQL

Running filter statements using where clauses

Preparing the source datasets

Visualizing our flight-performance data

Checking for duplicates, missing observations, and outliers

Missing observations

Getting familiar with your data

Correlations

Histograms

Solving cases

Overview of the package

Getting to know your data

Correlations

Creating the final dataset

Splitting into training and testing

Logistic regression in MLlib

Random forest in MLlib

Overview of the package

Estimators

Regression

Pipeline

Loading the data

Creating an estimator

Fitting the model

Saving the model

Grid search

Other features of PySpark ML in action

Discretizing continuous variables

Classification

Finding clusters in the births dataset

What is Spark Streaming?

What is the Spark Streaming application data flow?

Introducing Structured Streaming

The spark-submit command

Deploying the app programmatically

Creating a SparkSession

Structure of the module

User-defined functions in Spark

Monitoring execution

Frequently Asked Questions

This "Big Data Processing with PySpark" course is instructor-led training (ILT). The trainer travels to your office and delivers the training on your premises. If you need a training space, we can provide a fully equipped lab with all the required facilities. Online instructor-led training is also available if required. Online sessions are live: participants can see the instructor's screen and hear the instructor, the instructor can see participants' screens, and participants can ask questions during the session.

Participants will receive study material specific to "Big Data Processing with PySpark". Participants will have lifetime access to all the code and resources needed for the "Big Data Processing with PySpark" course. Our public GitHub repository and the study material will also be shared with participants.

All zekeLabs courses are hands-on. The code and documents used in class will be provided to participants. A cloud lab and virtual machines are provided to every participant during the "Big Data Processing with PySpark" training.

The duration of the "Big Data Processing with PySpark" training depends on several factors, including the team's prior knowledge of the subject, the team's learning objectives, and how much customization the course needs. Contact us to learn more about the "Big Data Processing with PySpark" course duration.

The "Big Data Processing with PySpark" training is organised at the client's premises. We have delivered, and continue to deliver, "Big Data Processing with PySpark" training in India, the USA, Singapore, Hong Kong, and Indonesia. We can also provide state-of-the-art training facilities based on client requirements.

Our subject matter experts (SMEs) have more than ten years of industry experience, which helps make the program a holistic, 360-degree learning experience. The course has been designed in close collaboration with experts working at organisations such as Google, Microsoft, and Amazon, among others.

Yes, absolutely. For every training, we hold a technical call between our subject matter expert (SME) and the technical lead of the team being trained. The course is tailored to the participants' current expertise, the objectives of the team undergoing the training, and the short-term and long-term objectives of the organisation.

Email us at [email protected] or call us at +91 8041690175, and we will get back to you as soon as possible with answers to your queries about the "Big Data Processing with PySpark" course.

Recommended Courses

Apache Cassandra

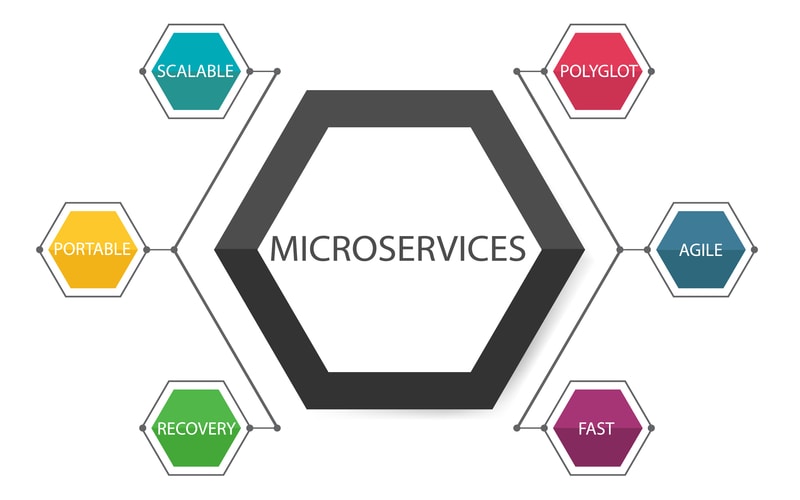

Microservices